Continuous Deployment of AWS Batch with CircleCI

At Blinkist, we use AWS Batch to spin up compute instances that process some of our assets when they get uploaded or updated. When I started working with AWS Batch for the first time last year, I couldn't find any information about how to continuously deploy changes to an AWS Batch Job with our CI/CD service, CircleCI. It took us a while to figure out a clean way to do it. If you have found a better way, please feel free to share it with us!

A very brief introduction to AWS Batch

To understand which parts need to be automatically updated when the Docker Image (containing our processing code) changes, we need a bit of basic knowledge about AWS Batch.

I won't go into details about when or how to use AWS Batch; there are plenty of blog posts about that. I also won't write about how to continuously test your Docker Image, since that setup depends on the language you use and on what your code does.

An AWS Batch Job contains the following components:

A Docker Image that contains the processing code. It can be hosted e.g. on Docker Hub or (like we do) on AWS Elastic Container Registry (ECR).

The Compute Environment lets you configure the compute instances for your jobs. It's like when you buy a new computer and specify how much memory and CPU it should have. Only this machine lives virtually in the cloud: you can buy as many as you need to get your job done and then return them when you don't need them anymore.

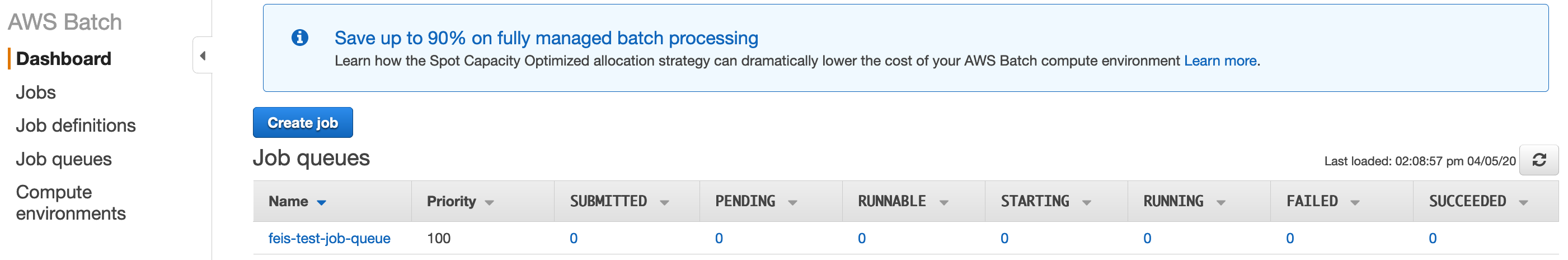

The Job Queue is where your jobs wait to be processed. AWS uses the Compute Environment to spin up EC2 instances and then distributes queued jobs to the instances with matching capacity. Jobs in the queue can have different states like SUBMITTED, RUNNING or FAILED.

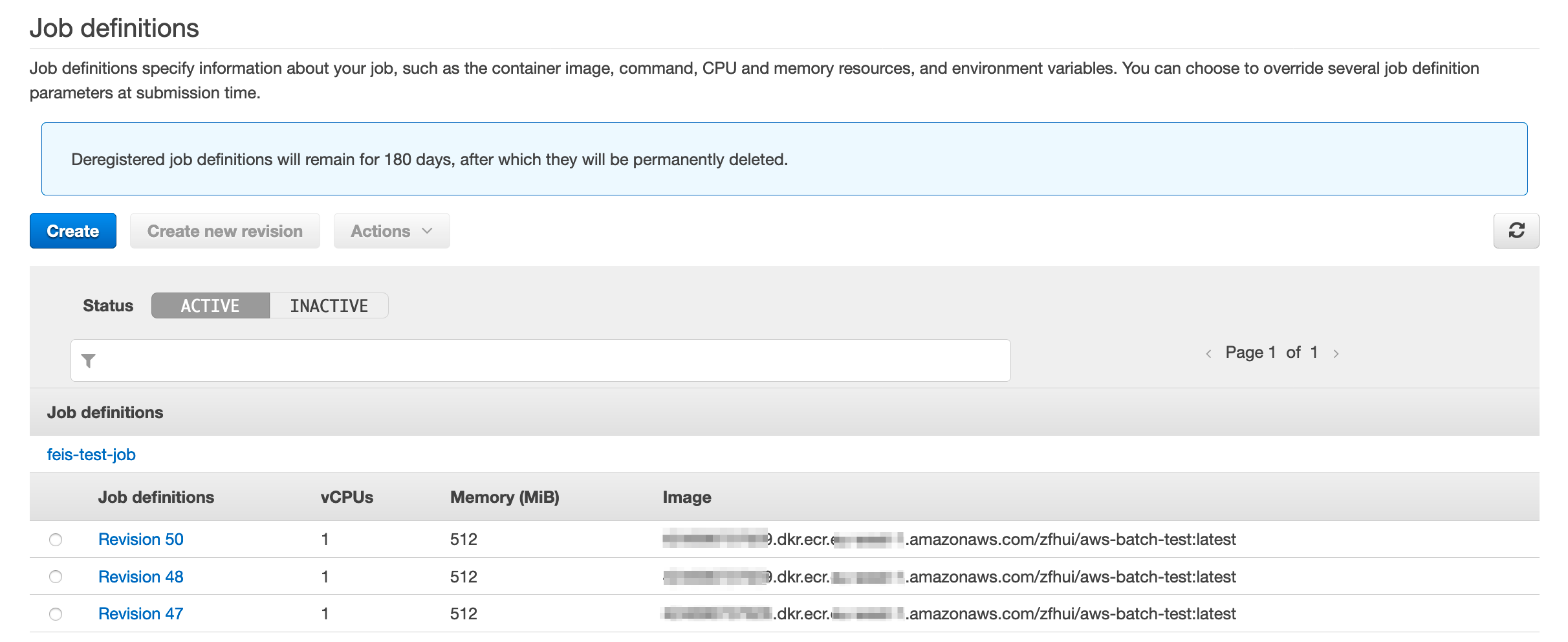

The Job Definition gives each of your jobs the configuration it needs to be started, processed and terminated. Here, you define e.g. how many resources each of your jobs can occupy, the IAM role that has all the policies necessary to run the processing code (e.g. read and upload files to S3 buckets), the URI of the Docker Image, and the command that starts the processing code in the Docker Image.

One important thing to know about a Job Definition is that when you want to change it (e.g. to change the version of your Docker Image), you need to create a new revision of it, which bumps the version of that Job Definition by 1. This will play an important role later when we write our deployment script.
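To make the revision idea a bit more concrete: the revision number is the last segment of a Job Definition's ARN. The following is only a small sketch; the region, account ID and Job Definition name in the ARN are made up for illustration.

```shell
# Hypothetical ARN: region, account ID and name are made up for illustration.
JOB_DEFINITION_ARN="arn:aws:batch:eu-west-1:123456789012:job-definition/image-resizer:42"

# Everything after the last ':' is the revision number.
REVISION="${JOB_DEFINITION_ARN##*:}"

# Everything after the last '/' is "name:revision"; drop the revision to get the name.
NAME_AND_REVISION="${JOB_DEFINITION_ARN##*/}"
NAME="${NAME_AND_REVISION%:*}"

echo "name=$NAME revision=$REVISION"
```

Registering a changed definition under the same name would yield an ARN ending in the next revision number.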

The last component in this list is the Job itself. There are multiple ways to submit a job, but you'll need a Job Definition (including its version), a Job Queue and a name for your Job.

An example use case

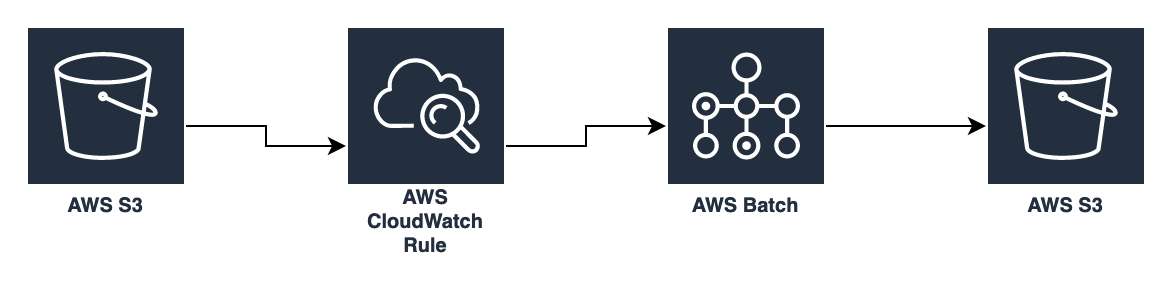

Here is a very simple use case to hopefully make things a bit clearer. I'll use it to demonstrate the parts of our CD:

- an image file gets uploaded into an AWS S3 bucket

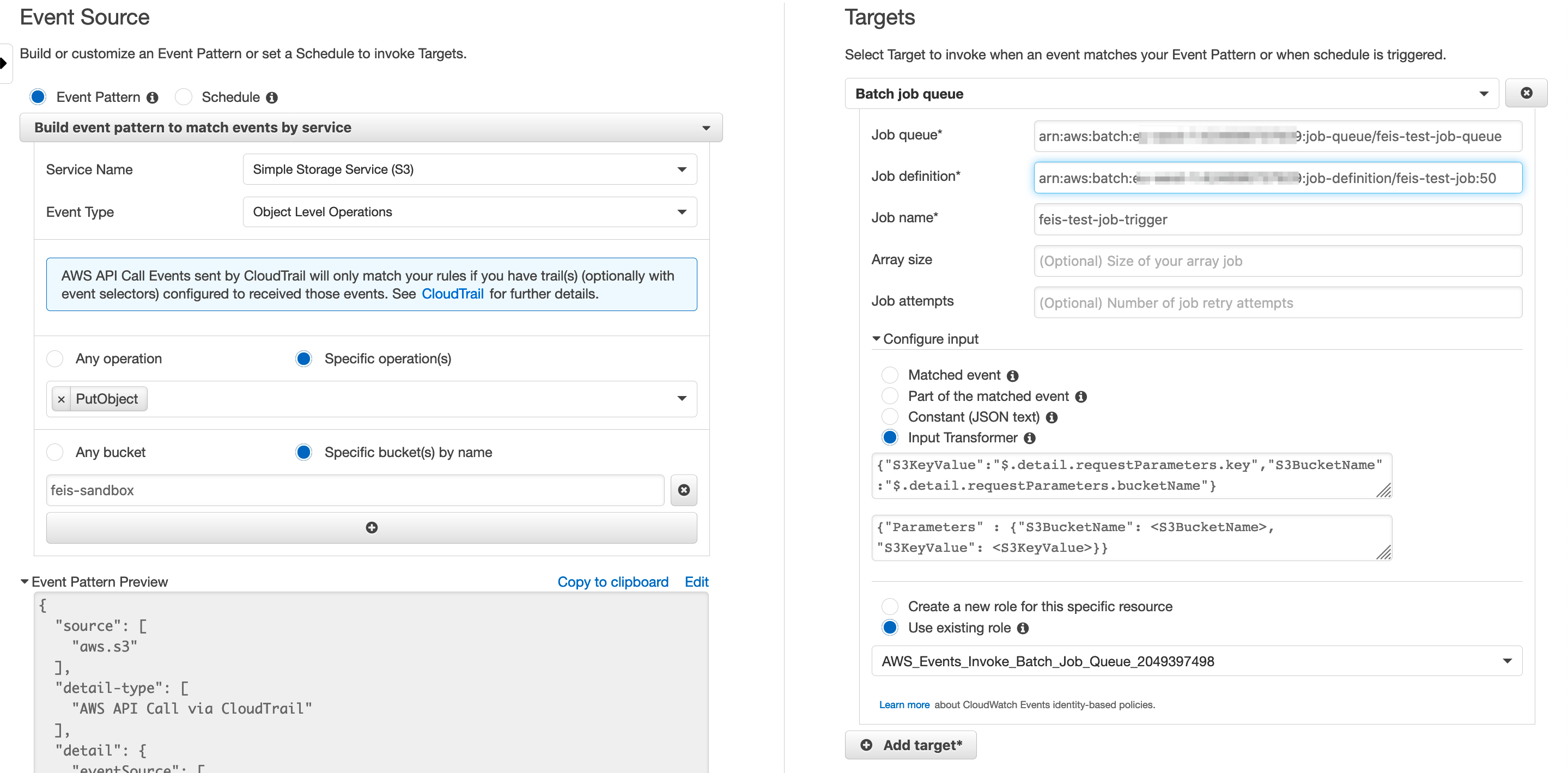

- the upload event triggers a pre-defined AWS CloudWatch Events Rule, which submits a new AWS Batch Job and passes along that image file's bucket-name and key to the job as parameters.

- the new job lands in the AWS Batch Job Queue. AWS spins up an EC2 instance running the Docker Image configured by the Job Definition, processes the job and (as part of our example use case) creates different resolutions of the image from the upload S3 bucket and saves them into another S3 bucket, where they can then be used further

Continuous deployment

As mentioned earlier, whenever we create a new version of the Docker Image, we need to create a new version of the Job Definition pointing to the new Docker Image. And for our use case above, whenever we update the Job Definition's version, we'll also update the CloudWatch Events Rule to point to that new version.

This means our CD needs to take care of the following 3 steps:

- build the new Docker Image, tag it with a new version and push it to ECR

- create a Job Definition using the new Docker Image version

- update the CloudWatch Events Rule to target the new Job Definition

With these 3 steps in mind, it's probably not a surprise that our CircleCI workflow looks something like this:

# .circleci/config.yml
version: 2.1

workflows:
  version: 2
  test_and_build_and_deploy:
    jobs:
      - specs
      - build_and_push_image:
          requires:
            - specs
          filters:
            branches:
              only:
                - main
                - develop
      - update_batch_job_definition_dev:
          requires:
            - build_and_push_image
          filters:
            branches:
              only:
                - develop
      - update_batch_job_definition_prod:
          context: Github
          requires:
            - build_and_push_image
          filters:
            branches:
              only:
                - main

We're updating the Batch Job's Definition and the CloudWatch Events Rule in the same step, update_batch_job_definition, because we need the result of the Job Definition update for the CloudWatch Events Rule update. I didn't find a way to pass shell variables between different CircleCI shell sessions.
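One option that may be worth trying for steps within the same job: CircleCI sources the file referenced by $BASH_ENV at the start of every run step, so a value exported into that file in one step should be visible in the next one. The sketch below simulates two steps locally; the ARN is a made-up placeholder, and the approach does not carry values across separate jobs.

```shell
# Outside CircleCI, BASH_ENV is usually not set, so fall back to a temp file for this demo.
BASH_ENV="${BASH_ENV:-$(mktemp)}"

# "Step 1": persist a value for later steps (the ARN is a made-up placeholder).
echo 'export BATCH_JOB_DEFINITION_ARN="arn:aws:batch:eu-west-1:123456789012:job-definition/image-resizer:42"' >> "$BASH_ENV"

# "Step 2": CircleCI sources $BASH_ENV before each run step; here we do it by hand.
unset BATCH_JOB_DEFINITION_ARN
. "$BASH_ENV"
echo "$BATCH_JOB_DEFINITION_ARN"
```

Keeping both updates in a single run step, as we do, avoids the extra moving part; the sketch only shows what a split into two steps could look like.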

And because we use different AWS accounts for development and production, I needed to distinguish between deployments from the develop branch and deployments from the main branch.

Now let's look at what each of these steps does in more detail...

Build and push a new Docker Image

We used the CircleCI aws-ecr and aws-cli Orbs to simplify the AWS CLI configurations. You can of course also install and add your credentials and profiles on your own, if you don't rely on 3rd party Orbs.

orbs:
  aws-ecr: circleci/aws-ecr@6.5.0
  aws-cli: circleci/aws-cli@0.1.17

For the new Docker Image's version, we use the SHA1 of the last Git commit of the current build. CircleCI gives us access to it via the env variable CIRCLE_SHA1. And because we don't want to build the same image twice for the develop and main branches, we check whether a Docker Image with that version already exists and only build, tag and push it when that's not the case.

Environment variables like BATCH_DEPLOY_ACCESS_KEY_ID, BATCH_DEPLOY_SECRET_ACCESS_KEY, AWS_ECR_REGION, AWS_ECR_ACCOUNT_URL and AWS_ECR_REPO were created first in AWS and then added to the CircleCI project's settings.

jobs:
  build_and_push_image:
    executor: aws-ecr/default
    steps:
      - checkout
      - aws-cli/setup:
          aws-access-key-id: BATCH_DEPLOY_ACCESS_KEY_ID
          aws-secret-access-key: BATCH_DEPLOY_SECRET_ACCESS_KEY
          aws-region: AWS_ECR_REGION
      - aws-ecr/ecr-login:
          region: AWS_ECR_REGION
      - run:
          name: Build and push Image
          command: |
            # List the tags of all images that already exist in the repository.
            EXISTING_IMAGES=$(aws ecr list-images \
              --repository-name "$AWS_ECR_REPO" \
              --query 'imageIds[*].imageTag')
            # grep -q exits with 0 when the tag is found, so it can drive the if directly.
            if echo "$EXISTING_IMAGES" | grep -q "$CIRCLE_SHA1"; then
              echo "Docker image with tag $CIRCLE_SHA1 exists, no need to build it again."
            else
              echo "Docker image with tag $CIRCLE_SHA1 doesn't exist, let's build it."
              docker build -t "$AWS_ECR_REPO:$CIRCLE_SHA1" .
              docker tag "$AWS_ECR_REPO:$CIRCLE_SHA1" "$AWS_ECR_ACCOUNT_URL/$AWS_ECR_REPO:$CIRCLE_SHA1"
              docker push "$AWS_ECR_ACCOUNT_URL/$AWS_ECR_REPO:$CIRCLE_SHA1"
            fi
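The check above matches the SHA1 anywhere in the CLI output. If you ever tag images with something shorter than a full SHA1, a stricter whole-line match might be safer. Here is a minimal sketch of that idea; tag_exists is a hypothetical helper, and the tag list is made up and merely stands in for the tags returned by aws ecr list-images.

```shell
# Hypothetical helper: returns 0 only if the exact tag is present
# in a newline-separated list of tags.
tag_exists() {
  tags="$1"
  wanted="$2"
  # -x: match whole lines only, -F: treat the tag as a fixed string, -q: no output.
  printf '%s\n' "$tags" | grep -qxF -- "$wanted"
}

# Made-up tag list, one tag per line.
EXISTING_TAGS=$(printf '%s\n' abc1234def latest 9f8e7d6c)

if tag_exists "$EXISTING_TAGS" "9f8e7d6c"; then
  echo "exact tag found"
fi

if ! tag_exists "$EXISTING_TAGS" "9f8e"; then
  echo "partial matches are rejected"
fi
```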

Update Batch Job Definition and CloudWatch Events Rule

Here, too, we rely on the CircleCI aws-cli Orb to simplify the AWS CLI configurations. In the jobs part of .circleci/config.yml, I'm more or less just passing in the parameters for the update_batch_job_definition command. Those parameters were created on AWS and added to this project's env variables on CircleCI. The BATCH_DEPLOY_ROLE is the ARN of the AWS IAM role whose attached policies allow it to list + update Batch Jobs and list + update CloudWatch Events Rules.

jobs:
  # ...
  update_batch_job_definition_dev:
    executor: aws-cli/default
    steps:
      - update_batch_job_definition:
          aws-access-key-id: BATCH_DEPLOY_ACCESS_KEY_ID
          aws-secret-access-key: BATCH_DEPLOY_SECRET_ACCESS_KEY
          aws-region: DEV_REGION
          batch-deploy-role: $DEV_BATCH_DEPLOY_ROLE
          batch-job-definition-name: $DEV_BATCH_JOB_DEFINITION_NAME
          # The command declares this parameter without a default, so it must be passed.
          cloud-watch-event-rule: $DEV_CLOUD_WATCH_EVENT_RULE  # assumed env variable name
  update_batch_job_definition_prod:
    executor: aws-cli/default
    steps:
      - update_batch_job_definition:
          aws-access-key-id: BATCH_DEPLOY_ACCESS_KEY_ID
          aws-secret-access-key: BATCH_DEPLOY_SECRET_ACCESS_KEY
          aws-region: PROD_REGION
          batch-deploy-role: $PROD_BATCH_DEPLOY_ROLE
          batch-job-definition-name: $PROD_BATCH_JOB_DEFINITION_NAME
          cloud-watch-event-rule: $PROD_CLOUD_WATCH_EVENT_RULE  # assumed env variable name

I'm using CircleCI's commands to create reusable code snippets so I can avoid writing the same code for develop and for main. Let me show you the configuration in its entirety, and we'll go through it step by step afterwards.

commands:
  update_batch_job_definition:
    description: Register a new Batch Job with new Docker Image and update CloudWatch Events Rule with new Batch Job's ARN
    parameters:
      aws-access-key-id:
        type: string
      aws-secret-access-key:
        type: string
      aws-region:
        type: string
      batch-deploy-role:
        type: string
      batch-job-definition-name:
        type: string
      cloud-watch-event-rule:
        type: string
    steps:
      - aws-cli/setup:
          aws-access-key-id: << parameters.aws-access-key-id >>
          aws-secret-access-key: << parameters.aws-secret-access-key >>
          aws-region: << parameters.aws-region >>
          profile-name: default
      - run:
          name: Setup batch_deploy user
          command: |
            aws configure set role_arn << parameters.batch-deploy-role >> --profile batch_deploy
            # aws-region holds the NAME of an env variable, hence the leading $.
            aws configure set region $<< parameters.aws-region >> --profile batch_deploy
            aws configure set source_profile default --profile batch_deploy
      - run:
          name: Register a new Batch Job with new Docker Image and update CloudWatch Events Rule with new Batch Job's ARN
          command: |
            sudo apt-get install -y jq

            # Fetch the current Job Definition, keeping only the fields needed to register a new one.
            LATEST_JOB_DEFINITION=$(aws --profile batch_deploy batch describe-job-definitions \
              --job-definition-name << parameters.batch-job-definition-name >> \
              --status ACTIVE \
              --max-items 1 \
              --query 'jobDefinitions[0].{type:type,jobDefinitionName:jobDefinitionName,containerProperties:containerProperties,retryStrategy:retryStrategy,timeout:timeout}')

            # Swap in the freshly pushed Docker Image.
            NEW_DOCKER_IMAGE=$AWS_ECR_ACCOUNT_URL/$AWS_ECR_REPO:$CIRCLE_SHA1
            NEW_JOB_DEFINITION=$(echo $LATEST_JOB_DEFINITION | jq '.containerProperties.image = "'$NEW_DOCKER_IMAGE'"')
            echo -e "\nThis will be the new job definition:"
            echo $NEW_JOB_DEFINITION | jq .

            echo -e "\nRegister new job definition and return its new ARN:"
            # The ARN comes back as a JSON string, i.e. including its double quotes.
            BATCH_JOB_DEFINITION_ARN=$(aws --profile batch_deploy batch register-job-definition --cli-input-json "$NEW_JOB_DEFINITION" --query jobDefinitionArn)

            # Point the rule's first target at the new Job Definition.
            LIST_TARGETS_OUTPUT=$(aws --profile batch_deploy events list-targets-by-rule \
              --rule << parameters.cloud-watch-event-rule >> \
              --query Targets[0])
            NEW_TARGETS_DEFINITION=$(echo $LIST_TARGETS_OUTPUT | jq '(.BatchParameters.JobDefinition) |= '$BATCH_JOB_DEFINITION_ARN'')
            echo -e "\nThis will be the rule's new target:"
            echo $NEW_TARGETS_DEFINITION | jq .

            aws --profile batch_deploy events put-targets \
              --rule << parameters.cloud-watch-event-rule >> \
              --targets "$NEW_TARGETS_DEFINITION"

Now, let's have a more detailed look at what the last step, "Register a new Batch Job with new Docker Image and update CloudWatch Events Rule with new Batch Job's ARN", does, because that's the interesting part.

- We install jq, because we'll need it later to manipulate some JSON. The AWS CLI uses JSON extensively to configure AWS resources.

sudo apt-get install -y jq

- We grab the current Job Definition and extract its configurations: type, jobDefinitionName, containerProperties, retryStrategy and timeout into a JSON object, and assign it to the variable LATEST_JOB_DEFINITION. We'll need these values later when we create the new Job Definition.

LATEST_JOB_DEFINITION=$(aws --profile batch_deploy batch describe-job-definitions \
  --job-definition-name << parameters.batch-job-definition-name >> \
  --status ACTIVE \
  --max-items 1 \
  --query 'jobDefinitions[0].{type:type,jobDefinitionName:jobDefinitionName,containerProperties:containerProperties,retryStrategy:retryStrategy,timeout:timeout}')

If you printed LATEST_JOB_DEFINITION at this point, it would look like this:

echo $LATEST_JOB_DEFINITION | jq .

{
  "retryStrategy": {
    "attempts": 5
  },
  "containerProperties": {
    "mountPoints": [],
    "image": "********************************************/********************:%YOUR_PREVIOUS_GIT_COMMIT_SHA1",
    "environment": [
      {
        "name": "S3_BUCKET_NAME",
        "value": "******************"
      },
      {
        "name": "S3_KEY_VALUE",
        "value": "*************"
      }
    ],
    "vcpus": 1,
    "jobRoleArn": "*********************************************************",
    "volumes": [],
    "memory": 1024,
    "resourceRequirements": [],
    "command": [
      "echo",
      "Hello, world!"
    ],
    "ulimits": []
  },
  "type": "container",
  "timeout": {
    "attemptDurationSeconds": 3000
  },
  "jobDefinitionName": "*******"
}

- We interpolate the name for the new Docker Image and assign it to NEW_DOCKER_IMAGE:

NEW_DOCKER_IMAGE=$AWS_ECR_ACCOUNT_URL/$AWS_ECR_REPO:$CIRCLE_SHA1

- We use jq to replace the current Job Definition's Docker Image with NEW_DOCKER_IMAGE:

NEW_JOB_DEFINITION=$(echo $LATEST_JOB_DEFINITION | jq '.containerProperties.image = "'$NEW_DOCKER_IMAGE'"')

- And then we register the new Job Definition with the newly created NEW_JOB_DEFINITION JSON and let the AWS CLI return its ARN, which we assign to BATCH_JOB_DEFINITION_ARN. We'll use this to update our CloudWatch Events Rule.

BATCH_JOB_DEFINITION_ARN=$(aws --profile batch_deploy batch register-job-definition --cli-input-json "$NEW_JOB_DEFINITION" --query jobDefinitionArn)

echo $BATCH_JOB_DEFINITION_ARN

"arn:aws:batch:*********:$aws_account_id:job-definition/*******:42"

- Updating the CloudWatch Events Rule follows the same pattern as registering a new Batch Job Definition:

We get the latest Rule and query for its Target's definition:

LIST_TARGETS_OUTPUT=$(aws --profile batch_deploy events list-targets-by-rule \
  --rule << parameters.cloud-watch-event-rule >> \
  --query Targets[0])

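We replace the value of the Target's "Job Definition" with our newly registered BATCH_JOB_DEFINITION_ARN with the help of jq. BATCH_JOB_DEFINITION_ARN already contains its surrounding double quotes (see the echo output above), so it goes into the jq filter as-is; wrapping it in another pair of quotes would produce an invalid filter.

NEW_TARGETS_DEFINITION=$(echo $LIST_TARGETS_OUTPUT | jq '(.BatchParameters.JobDefinition) |= '$BATCH_JOB_DEFINITION_ARN'')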

We update the Target of the Event Rule with our NEW_TARGETS_DEFINITION:

aws --profile batch_deploy events put-targets \
  --rule << parameters.cloud-watch-event-rule >> \
  --targets "$NEW_TARGETS_DEFINITION"
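One detail in the steps above is easy to miss: with JSON output, --query jobDefinitionArn prints the ARN as a JSON string, including its double quotes. If you need the bare value instead (for example to pass it as a plain command-line argument), the quotes can be stripped with shell parameter expansion. This is only a sketch that assumes the value is a single quoted string; the ARN is made up. Alternatively, adding --output text to the aws command prints the value without quotes.

```shell
# Value as printed by the CLI with JSON output: note the surrounding double quotes.
# The ARN itself is made up for illustration.
RAW_ARN='"arn:aws:batch:eu-west-1:123456789012:job-definition/image-resizer:42"'

# Strip one leading and one trailing double quote, if present.
ARN="${RAW_ARN#\"}"
ARN="${ARN%\"}"

echo "$ARN"
```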

And we're done!

Postscriptum

To be honest, I have no clue if we're being geniuses or idiots by doing the whole thing this way. 😅

In one of the previous iterations of this script, instead of retrieving the current definition as JSON and replacing only the parts that need to change with jq, we hardcoded the entire JSON, value by value. Not only did we have to configure a lot more env variables in CircleCI, but it also led to the issue that whenever we wanted to reconfigure our Batch Job Definition, we had to update the script and deploy the entire thing. Because it was faster, we sometimes reconfigured the Batch Job manually via AWS's UI and then forgot to update the script. That led to the next deployment simply reverting all the manual changes. By doing it the current way, we at least have a single source of truth.

I worked very closely with our DevOps engineer Mary Rose Quito, who was very, very, very patient with all of my AWS questions and my countless failed attempts. So thank you very much, MR!